Around February-March last year we started work on a new software development project. Thanks to our energetic and enthusiastic team members, we delivered its first release around May-June, and development has continued ever since. The product did great business: within six months of launch it had expanded into multiple states of the United States and built a huge client base. This unexpected success brought smiles to our faces and a feeling of immense pride and satisfaction, but it also brought challenges, the biggest being an immediate need to scale our infrastructure.

Scaling infrastructure is a routine process and not a big deal for a veteran IT company like ours. But because our application and its user base were growing at a very rapid pace, we could not tell whether this momentum would continue, accelerate, or slow down. Unlike a novice organization, we did not want to build an unnecessarily large infrastructure that the application would not fully utilize and that would only add cost, so we wanted an infrastructure agile enough to be upgraded at any moment with the least possible effort. We also wanted to improve the security of both our infrastructure and our application (they were already quite secure, but we wanted to beef them up) and to keep our servers and database available at all times. And we wanted to achieve all of this in the most cost-effective manner, without hampering the performance of our application, and improving it wherever possible.

After an ample amount of R&D into best practices and into what would work best for our application, we achieved our objective: an entirely new infrastructure that is not only scaled up but also more agile, more efficient, more secure and more reliable. In this age of digitalization, an effective web presence is crucial to the success of any organization, and it can only be ensured with a robust IT infrastructure. An ineffective or improperly implemented infrastructure can leave a bad impression on your clients and consequently hamper your business. Through this blog we want to share our research and experience so that it can help you scale your own infrastructure. If you would like to benefit from our experience and years of research for any kind of IT-related service, feel free to contact us.

What is IT infrastructure and Scaling of Infrastructure:-

IT infrastructure is the combined set of hardware, software, networks, etc. used to develop, test, monitor and host web or mobile applications (or services). One or more computers are grouped together to host an application. Each computer can handle a fixed amount of load, depending on its hardware configuration: RAM, storage, number of CPU cores, network performance, etc. To accommodate a load greater than the capacity of any single computer, we need to distribute it across several computers. Hence several servers are created, and the load is distributed among them using load-balancing techniques. This arrangement, or architecture, is what we call IT infrastructure. Since no web or mobile application has a constant number of users all the time, the load on the application varies, and the infrastructure needs to be upgraded from time to time. The process of increasing the load capacity of an application is known as scaling of infrastructure.
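
To make the idea of distributing load concrete, here is a minimal sketch of round-robin assignment, one of the simplest load-balancing techniques. The server names and request IDs are hypothetical and exist only for illustration; a real load balancer does this at the network level rather than in application code.

```python
from collections import Counter
from itertools import cycle


def distribute_round_robin(requests, servers):
    """Assign each request to the next server in turn (round-robin)."""
    if not servers:
        raise ValueError("at least one server is required")
    rotation = cycle(servers)
    return {request: next(rotation) for request in requests}


# Hypothetical server names and request IDs, for illustration only.
servers = ["server-a", "server-b", "server-c"]
requests = [f"req-{i}" for i in range(7)]

assignment = distribute_round_robin(requests, servers)
load_per_server = Counter(assignment.values())
print(load_per_server)
```

With seven requests and three servers, no server ends up with more than one request above any other, which is the property that makes round-robin a reasonable default when requests cost roughly the same.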

Different methods of scaling of Infrastructure:-

Infrastructure can be scaled up in two different ways:-

- Horizontal Scaling:-

- Vertical Scaling :-

Let us understand this with an example. Suppose you and some of your friends plan a trip and book a vehicle through a travel agent. Later, a few more friends decide to join. What do you do now? Option 1: ask the travel agent for a larger vehicle. Option 2: ask for a few more vehicles of the same kind. Option 3: cancel the original vehicle and book a few larger vehicles instead.

Now let’s relate this to infrastructure scaling. Choosing option 1, asking the travel agent for a larger vehicle, corresponds to ‘Vertical Scaling’; choosing option 2, asking for a few more vehicles of the same kind, corresponds to ‘Horizontal Scaling’. No rule says which method is best: there is no silver bullet, and the right choice depends entirely on your application. In the example above, the best decision depends on factors such as how many more friends joined, what the original and the new vehicles cost, and how many seats each has.

So, in vertical scaling we increase capacity by adding resources (CPUs, RAM, disk capacity, etc.) to the existing nodes, while in horizontal scaling we increase capacity by adding more nodes. A combination of the two can also be a great option, like option 3 in the example (‘cancel the original vehicle and book a few larger vehicles’), and this is the option we chose for our application this time. So before you start planning to scale your infrastructure, analyze your application and learn as much about it as you can.
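
The three options can be compared with some simple arithmetic. The sketch below is a deliberately simplified model: it assumes capacity grows linearly with node size and node count, which real systems rarely achieve exactly, and the numbers (500 requests per second per node) are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cluster:
    nodes: int              # number of machines
    capacity_per_node: int  # requests per second one machine can handle

    @property
    def total_capacity(self) -> int:
        # Simplifying assumption: capacity adds up linearly across nodes.
        return self.nodes * self.capacity_per_node

    def scale_vertically(self, factor: int) -> "Cluster":
        """Option 1: same number of nodes, each one made more powerful."""
        return Cluster(self.nodes, self.capacity_per_node * factor)

    def scale_horizontally(self, extra_nodes: int) -> "Cluster":
        """Option 2: same node size, more nodes."""
        return Cluster(self.nodes + extra_nodes, self.capacity_per_node)


# Hypothetical starting point: one node handling 500 requests per second.
current = Cluster(nodes=1, capacity_per_node=500)

vertical = current.scale_vertically(2)      # 1 node, twice as large
horizontal = current.scale_horizontally(1)  # 2 nodes of the original size
combined = current.scale_vertically(2).scale_horizontally(2)  # option 3

for label, cluster in [("vertical", vertical), ("horizontal", horizontal), ("combined", combined)]:
    print(label, cluster.nodes, "node(s),", cluster.total_capacity, "req/s")
```

In this model, options 1 and 2 reach the same total capacity, so the choice between them comes down to the other factors mentioned above, such as cost and what happens when a single node fails.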

Steps to be considered while planning for infrastructure scaling :-

- Analyze your application and requirements and figure out which kind of infrastructure suits your application best.

- Learn as much as you can about best practices.

- Figure out which of them suit your requirements best.

- Create several sets of test infrastructure with different configurations, based on your research in the previous steps.

- Test their performance under different loads and find out which set gives the best result at your expected load. If several sets give similar results, pick the most cost-effective one.
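
The last step, picking the most cost-effective configuration among those that perform well enough, can be sketched as a small selection function. Everything here is an assumption made for illustration: the configuration names, the costs, the measurements, and the choice of 95th-percentile response time and error rate as acceptance criteria. None of it is our actual test data.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class LoadTestResult:
    name: str
    monthly_cost_usd: float
    p95_response_ms: float  # 95th-percentile response time at the expected load
    error_rate: float       # fraction of failed requests, 0.0 to 1.0


def pick_configuration(
    results: List[LoadTestResult],
    max_p95_ms: float,
    max_error_rate: float,
) -> Optional[LoadTestResult]:
    """Return the cheapest configuration that meets both targets, or None."""
    acceptable = [
        r for r in results
        if r.p95_response_ms <= max_p95_ms and r.error_rate <= max_error_rate
    ]
    if not acceptable:
        return None
    # Cheapest first; if costs tie, prefer the faster configuration.
    return min(acceptable, key=lambda r: (r.monthly_cost_usd, r.p95_response_ms))


# Made-up numbers, purely illustrative.
results = [
    LoadTestResult("config-a", 120.0, 950.0, 0.04),
    LoadTestResult("config-b", 210.0, 420.0, 0.001),
    LoadTestResult("config-c", 340.0, 400.0, 0.001),
]

best = pick_configuration(results, max_p95_ms=500.0, max_error_rate=0.01)
print(best.name if best else "no configuration meets the targets")
```

Here `config-c` is slightly faster than `config-b`, but both meet the targets, so the cheaper one wins.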

Now we will look at how we implemented infrastructure scaling in our project. But first, let’s understand our application and our existing infrastructure.

Our Application:-

Our web application mainly consists of three parts:-

- Client-side Script: Client-side scripts are scripts that run on the client machine (for example, in a web browser). The main advantage of effective client-side scripting is that it can significantly reduce the server load and thus help minimize execution time. We are using Vue.js as our client-side scripting technology and Nginx as the web server that hosts it.

- Server-side Script: Server-side scripts are scripts that are executed on the backend server rather than on the client machine. We are using Node.js as our server-side scripting technology and PM2 as the process manager that runs it.

- Database: Database is used to store data. We are using MongoDB as our database.

We are hosting our web-application on AWS EC2 instances.

Our Existing Infrastructure:-

As discussed earlier, we started this project only a few months ago, and this is its first infrastructure scaling. Since we had limited load at the start, we had created a single EC2 instance for our entire web application, i.e. we were hosting our client-side scripts, server-side scripts and database on the same EC2 instance.

Planning for our new Infrastructure:-

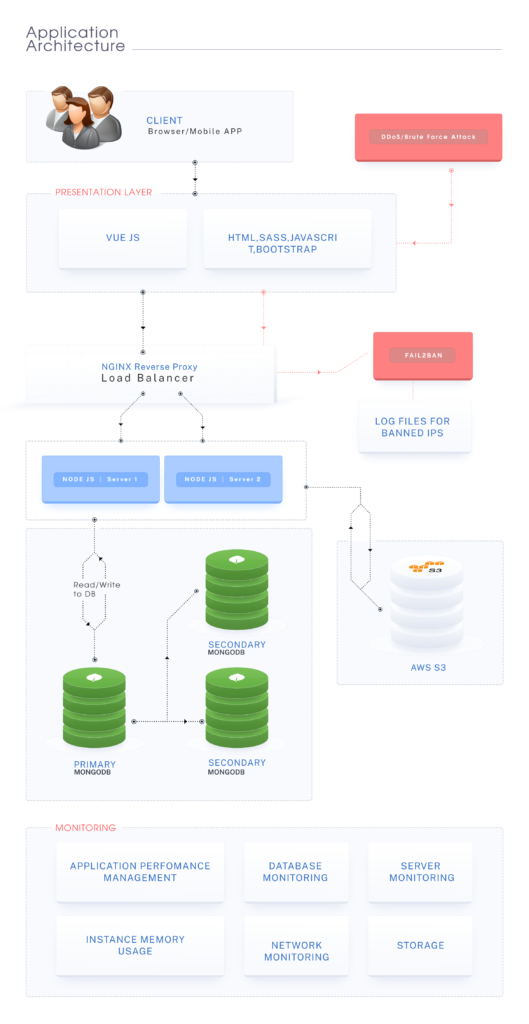

Based on the structure of our application and an analysis of the problems with our existing infrastructure, we decided to host our client-side scripts, server-side scripts and database on separate EC2 instances in the new infrastructure. End users get access to the Nginx (client-side scripts) server only; the Nginx server communicates with the Node (server-side scripts) servers internally, and the Node servers communicate with the database internally. This architecture not only distributes the load and reduces the load on any single EC2 instance, but also enhances the security of our servers, our application and, most importantly, our database. To strengthen security further, we decided to allow only our Nginx server’s IPs to access our Node servers, and only our Node servers’ IPs to access our database instances.

Since client-side scripts are executed on the client machine (in the browser, etc.), we decided to use only one EC2 instance for them. Server-side scripts, however, are executed on the server itself, so we decided to create two Node servers on separate EC2 instances and distribute the load between them; with this structure, if one Node server stops working, the other keeps running and our application continues to serve. To ensure the availability of data at all times, we decided to go for a three-node MongoDB replica set instead of a single-node standalone MongoDB database, with each node of the replica set on its own EC2 instance.
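
One reason three nodes is a common replica-set size: MongoDB needs a majority of voting members to be reachable in order to elect a primary. The arithmetic below is a small sketch of that rule (the function names are ours, not part of any MongoDB API), showing how many member failures each replica-set size can survive while still being able to elect a primary.

```python
def majority(voting_members: int) -> int:
    """Smallest number of members that forms a majority."""
    return voting_members // 2 + 1


def fault_tolerance(voting_members: int) -> int:
    """How many members can be lost while a majority remains."""
    return voting_members - majority(voting_members)


def can_elect_primary(voting_members: int, reachable_members: int) -> bool:
    """True if the reachable members are enough to elect a primary."""
    return reachable_members >= majority(voting_members)


for size in (1, 2, 3, 4, 5):
    print(f"{size} member(s): majority={majority(size)}, tolerates {fault_tolerance(size)} failure(s)")
```

A two-member set tolerates no failures, just like a standalone node, while a three-member set keeps a majority after losing any one member. Going from three to four members adds cost without adding fault tolerance.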

To select the right EC2 instance types for the new infrastructure, we created several sets of test infrastructure using different combinations of EC2 instance types, load-tested them with Gatling, and compared the results with those of a replica of the existing infrastructure (we did not want to disturb the existing infrastructure until we were ready to move our application to the new one). Based on the analysis of those tests, we selected the most cost-effective EC2 instances that were good enough for our desired load.

New Infrastructure’s architecture :-

Tools and Techniques used :-

To make our infrastructure more efficient, more secure and more reliable, to mitigate the risk of downtime, and to ensure the availability of services and data at all times, we used the following tools and techniques in our new infrastructure.

- Nginx load balancing:- As mentioned earlier, we created only one Nginx server but two Node servers. To distribute the load between the two Node servers, we used Nginx load balancing.

- Node js Clustering:- Since it is built on JavaScript (a single-threaded programming language), Node.js uses a ‘single-threaded event-loop model’ to handle multiple concurrent client requests. By default, Node.js runs a single process (using a single CPU core) and executes non-blocking I/O tasks on a single thread, although it does use additional threads in that process for some blocking I/O and CPU-intensive operations. On a multi-core machine, the default configuration does not start another process, so the other cores are left unused. With Node.js clustering, however, a single instance can run multiple processes. To take advantage of our multi-core machines we used Node.js clustering. In this model, several child processes are forked (equal in number to the CPU cores) to handle the actual work, while a parent process manages the distribution of tasks among them. Because multiple processes run in parallel, this helps reduce execution time.

- Fail2Ban:- We added Fail2ban to protect our web server from unauthorized access, brute-force attacks and DDoS attacks. A DDoS (distributed denial-of-service) attack is one in which multiple compromised computer systems attack a target, such as a server, website or other network resource, and cause a denial of service for its normal users. Tools such as Fail2ban help protect a server from such attacks: it continuously monitors log files, and if it detects repeated requests coming from the same IP, it blocks that IP. We have also configured it so that, whenever it blocks an IP, it informs us by email with all the details of that IP. In addition, we added custom filters to ban IPs from which a user repeatedly tries to log in to our web application; if someone gets blocked by mistake, we can unblock their IP manually.

- MongoDB replica-set:- As mentioned earlier, to ensure the availability of data at all times, the new infrastructure uses a three-node MongoDB replica set (1 primary node + 1 secondary node + 1 backup-cum-secondary node) in place of a single-node standalone MongoDB database. To deal with unexpected situations, we placed two nodes of the replica set in the same data center as both of our servers and one in a different data center, so that even if an entire data center crashes or goes down (not something we expect from AWS), our data remains safe. In addition, we back up the database to an S3 bucket every 3 hours using cron jobs.

- Monitoring:- For monitoring the services and instances, we are using New Relic. New Relic is a monitoring tool that provides real-time and historical insights into the performance and reliability of web and mobile applications, with several tools for monitoring their infrastructure, load, performance, etc. We are using ‘New Relic Infrastructure’ to monitor the performance, load, CPU usage, storage, etc. of all our server instances and the MongoDB replica set, and ‘New Relic APM’ to monitor the performance of our application.

- Gatling:- Gatling is a performance and load-testing tool. We used it to test the performance of both our new and our existing infrastructure, so that we could compare the results and find out whether the new infrastructure’s performance had really improved or had worsened. It also helped us select the right EC2 instances for the new infrastructure. We performed several tests using different sets of infrastructure and different scenarios. Detailed reports of the test results are published in the last part of the document.

- Monit:- Monit is a utility for managing and monitoring processes, programs, files, directories and filesystems on a Unix system. Monit conducts automatic maintenance and repair and can execute meaningful causal actions in error situations. For example, Monit can start a process if it is not running, restart a process if it does not respond, and stop a process if it uses too many resources. We are using Monit to automatically restart Nginx and MongoDB if they shut down for any reason, or on system reboot.

- PM2:- PM2 is a production process manager for Node.js applications with a built-in load balancer. It allows you to keep applications alive forever, to reload them without downtime, and to facilitate common system admin tasks.

- PM2 will automatically restart your application if it crashes.

- PM2 will keep a log of your unhandled exceptions.

- PM2 can ensure that any applications it manages restart when the server reboots.
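
To illustrate the kind of rule described under Fail2Ban above, here is a minimal sketch of "ban an IP after too many attempts within a time window". It is not Fail2ban's implementation and not our actual filter: the class and method names are hypothetical, the parameters are only modelled on Fail2ban's `maxretry`, `findtime` and `bantime` options, and we assume that failed login attempts are what gets counted. The IP address comes from a range reserved for documentation.

```python
from collections import defaultdict, deque


class BanTracker:
    """Toy model of a ban rule: too many failures in a window leads to a ban."""

    def __init__(self, max_retry: int, find_time: float, ban_time: float):
        self.max_retry = max_retry   # failures allowed before a ban
        self.find_time = find_time   # length of the counting window, in seconds
        self.ban_time = ban_time     # how long a ban lasts, in seconds
        self._failures = defaultdict(deque)  # ip -> timestamps of recent failures
        self._banned_until = {}              # ip -> time at which the ban ends

    def is_banned(self, ip: str, now: float) -> bool:
        until = self._banned_until.get(ip)
        if until is None:
            return False
        if now >= until:
            del self._banned_until[ip]  # ban has expired
            return False
        return True

    def record_failure(self, ip: str, now: float) -> bool:
        """Record one failed attempt; return True if it triggers a ban."""
        if self.is_banned(ip, now):
            return False
        window = self._failures[ip]
        window.append(now)
        # Forget failures that are older than the counting window.
        while window and now - window[0] > self.find_time:
            window.popleft()
        if len(window) >= self.max_retry:
            self._banned_until[ip] = now + self.ban_time
            window.clear()
            return True
        return False

    def unban(self, ip: str) -> None:
        """Manual unblock, for someone who was banned by mistake."""
        self._banned_until.pop(ip, None)
        self._failures.pop(ip, None)


# Three failures within ten minutes lead to a one-hour ban.
tracker = BanTracker(max_retry=3, find_time=600, ban_time=3600)
ip = "203.0.113.7"
for timestamp in (0, 10, 20):
    newly_banned = tracker.record_failure(ip, now=timestamp)
print(newly_banned, tracker.is_banned(ip, now=30))
```

Timestamps are passed in explicitly rather than read from the clock, which keeps the sketch easy to test; a real tool derives them from the log lines it watches.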

We hope this post helps you understand application architecture and infrastructure scaling, and guides you in planning your own infrastructure scaling.